Tags

AI (8) sewing (7) recycling (7) bags (7) software engineering (5) tech debt (4) software design (4) prompt engineering (4) ecommerce (4) Product Management (4) OpenAI (4) Empirical (4) context (3) architecture (3) Research (3) Learning (3) Hexagons (3) Embeddings (3) Board Game (3) 3-d Printing (3) twitter bootstrap (2) natural language (1) github pages (1) gardening (1) TypeScript (1) Streamlit (1) React (1) Modular AI (1) Makers Journey (1) Machine Learning (1) MVC (1) LoRA (1) Ionic (1) Heartbeat (1) Flan-T5 (1) Firebase (1) DaisyUI (1) DIY (1) Cosine Search (1) Context (1) Azure DevOps (1)

3-d Printing:

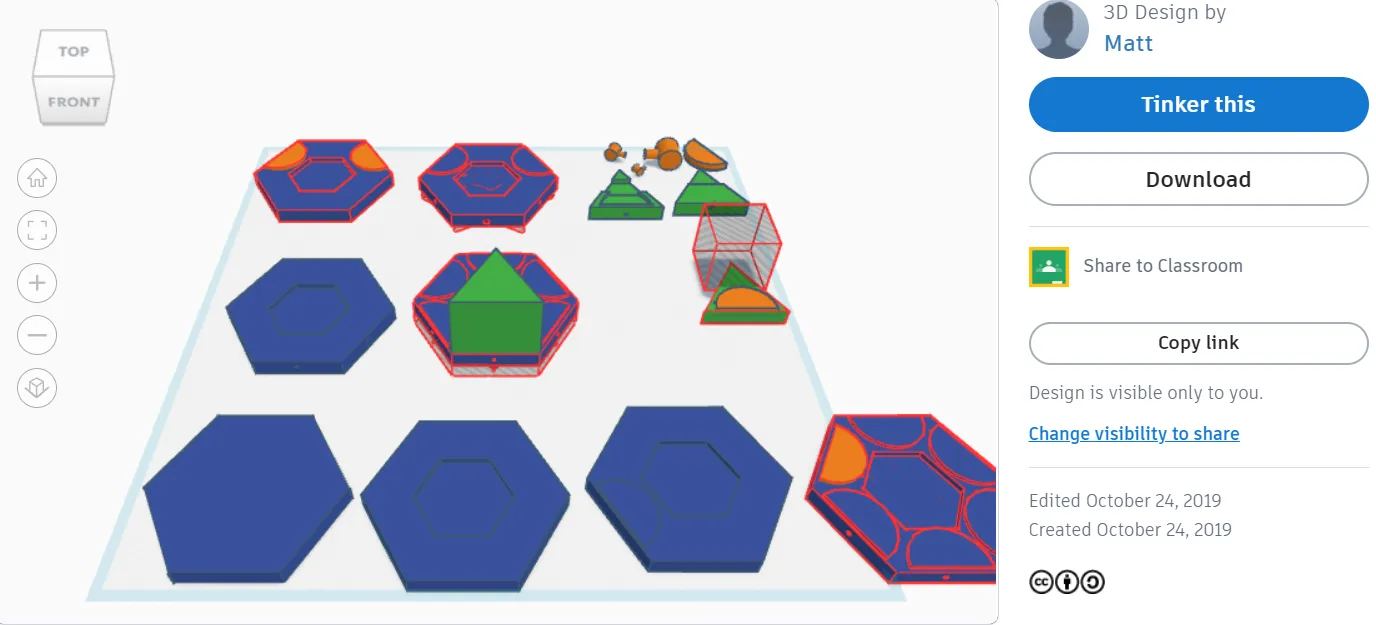

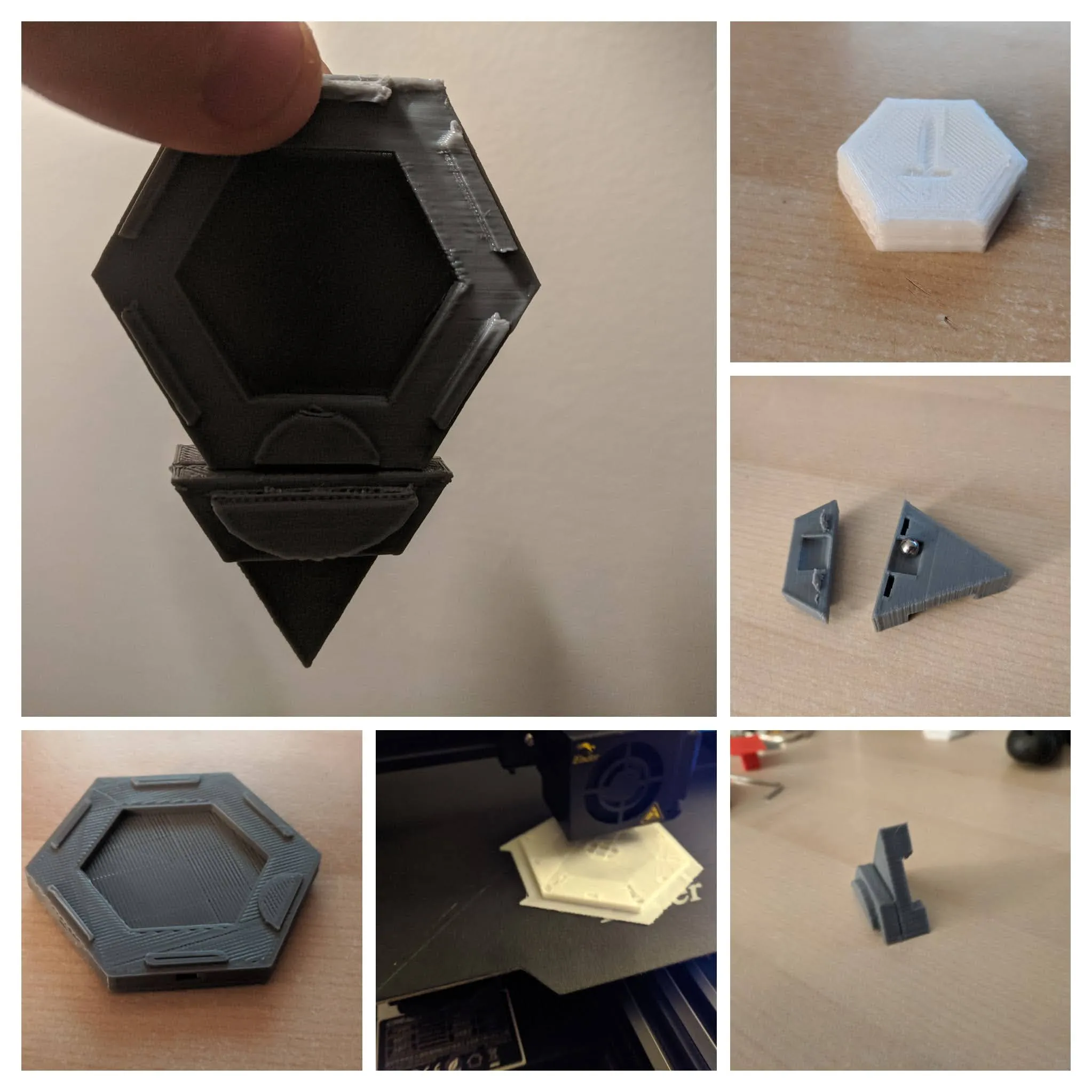

Finalizing a design that works

AI:

Hot-Swappable AI: Building Modular Memory for LLMs

I set out to see if a model could learn something new without retraining. The result is a working prototype that uses virtual tokens and LoRA adapters to hot-swap micro-models in real time through a Streamlit interface.

Heartbeat AI — The Always-On Assistant

Most AI tools today wait for you to ask a question. Heartbeat AI doesn't.

Experiment: Expanding Context with Dynamic Virtual Tokens

Lately, I've been exploring an idea that sits somewhere between RAG (Retrieval-Augmented Generation) and fine-tuning, but with a twist: what if we could dynamically expand a model's “memory” by injecting data as virtual tokens instead of through traditional context windows or retraining?

Beyond Context Windows: Building an LLM with Injectable Layers

What if an AI didn’t need to be fed massive prompts every time it responded? What if context wasn’t passed in—but *part* of the model itself?

Getting to the Heart of the Ask: Designing AI for Intent Clarity

When users talk to AI, they speak like humans. That’s the whole point. But the systems powering those conversations—whether it’s Azure DevOps, a database, or a custom app—speak a different language. This is where intent requests come in.

Rolling My Own: Why I Chose Firebase for Embeddings

As I was building Empirical, I realized that embeddings could be the key to maintaining context in conversations and interactions. But the question was: where do I store them?

Exploring Existing Embedding Solutions: Do I Really Need One?

Once I wrapped my head around embeddings, I started looking into how existing products were implementing them.

Discovering Embeddings: A Game-Changer for Contextual Awareness

I was reading up on natural language processing (NLP) and came across something called “embeddings.”

Azure DevOps:

Getting to the Heart of the Ask: Designing AI for Intent Clarity

When users talk to AI, they speak like humans. That’s the whole point. But the systems powering those conversations—whether it’s Azure DevOps, a database, or a custom app—speak a different language. This is where intent requests come in.

Board Game:

Finalizing a design that works

Context:

Discovering Embeddings: A Game-Changer for Contextual Awareness

I was reading up on natural language processing (NLP) and came across something called “embeddings.”

Cosine Search:

Rolling My Own: Why I Chose Firebase for Embeddings

As I was building Empirical, I realized that embeddings could be the key to maintaining context in conversations and interactions. But the question was: where do I store them?

DIY:

Rolling My Own: Why I Chose Firebase for Embeddings

As I was building Empirical, I realized that embeddings could be the key to maintaining context in conversations and interactions. But the question was: where do I store them?

DaisyUI:

Getting Started with Empirical: Setting the Foundation

I officially started working on Empirical, and things are shaping up! I'm building it with Ionic React and TypeScript

Embeddings:

Rolling My Own: Why I Chose Firebase for Embeddings

As I was building Empirical, I realized that embeddings could be the key to maintaining context in conversations and interactions. But the question was: where do I store them?

Exploring Existing Embedding Solutions: Do I Really Need One?

Once I wrapped my head around embeddings, I started looking into how existing products were implementing them.

Discovering Embeddings: A Game-Changer for Contextual Awareness

I was reading up on natural language processing (NLP) and came across something called “embeddings.”

Empirical:

Rolling My Own: Why I Chose Firebase for Embeddings

As I was building Empirical, I realized that embeddings could be the key to maintaining context in conversations and interactions. But the question was: where do I store them?

Exploring Existing Embedding Solutions: Do I Really Need One?

Once I wrapped my head around embeddings, I started looking into how existing products were implementing them.

Discovering Embeddings: A Game-Changer for Contextual Awareness

I was reading up on natural language processing (NLP) and came across something called “embeddings.”

Getting Started with Empirical: Setting the Foundation

I officially started working on Empirical, and things are shaping up! I'm building it with Ionic React and TypeScript

Firebase:

Rolling My Own: Why I Chose Firebase for Embeddings

As I was building Empirical, I realized that embeddings could be the key to maintaining context in conversations and interactions. But the question was: where do I store them?

Flan-T5:

Hot-Swappable AI: Building Modular Memory for LLMs

I set out to see if a model could learn something new without retraining. The result is a working prototype that uses virtual tokens and LoRA adapters to hot-swap micro-models in real time through a Streamlit interface.

Heartbeat:

Heartbeat AI — The Always-On Assistant

Most AI tools today wait for you to ask a question. Heartbeat AI doesn't.

Hexagons:

Finalizing a design that works

Ionic:

Getting Started with Empirical: Setting the Foundation

I officially started working on Empirical, and things are shaping up! I'm building it with Ionic React and TypeScript

Learning:

Rolling My Own: Why I Chose Firebase for Embeddings

As I was building Empirical, I realized that embeddings could be the key to maintaining context in conversations and interactions. But the question was: where do I store them?

Exploring Existing Embedding Solutions: Do I Really Need One?

Once I wrapped my head around embeddings, I started looking into how existing products were implementing them.

Discovering Embeddings: A Game-Changer for Contextual Awareness

I was reading up on natural language processing (NLP) and came across something called “embeddings.”

LoRA:

Hot-Swappable AI: Building Modular Memory for LLMs

I set out to see if a model could learn something new without retraining. The result is a working prototype that uses virtual tokens and LoRA adapters to hot-swap micro-models in real time through a Streamlit interface.

Machine Learning:

Hot-Swappable AI: Building Modular Memory for LLMs

I set out to see if a model could learn something new without retraining. The result is a working prototype that uses virtual tokens and LoRA adapters to hot-swap micro-models in real time through a Streamlit interface.

Makers Journey:

Hot-Swappable AI: Building Modular Memory for LLMs

I set out to see if a model could learn something new without retraining. The result is a working prototype that uses virtual tokens and LoRA adapters to hot-swap micro-models in real time through a Streamlit interface.

Modular AI:

Hot-Swappable AI: Building Modular Memory for LLMs

I set out to see if a model could learn something new without retraining. The result is a working prototype that uses virtual tokens and LoRA adapters to hot-swap micro-models in real time through a Streamlit interface.

OpenAI:

Rolling My Own: Why I Chose Firebase for Embeddings

As I was building Empirical, I realized that embeddings could be the key to maintaining context in conversations and interactions. But the question was: where do I store them?

Exploring Existing Embedding Solutions: Do I Really Need One?

Once I wrapped my head around embeddings, I started looking into how existing products were implementing them.

Discovering Embeddings: A Game-Changer for Contextual Awareness

I was reading up on natural language processing (NLP) and came across something called “embeddings.”

Getting Started with Empirical: Setting the Foundation

I officially started working on Empirical, and things are shaping up! I'm building it with Ionic React and TypeScript

React:

Getting Started with Empirical: Setting the Foundation

I officially started working on Empirical, and things are shaping up! I'm building it with Ionic React and TypeScript

Research:

Hot-Swappable AI: Building Modular Memory for LLMs

I set out to see if a model could learn something new without retraining. The result is a working prototype that uses virtual tokens and LoRA adapters to hot-swap micro-models in real time through a Streamlit interface.

Rolling My Own: Why I Chose Firebase for Embeddings

As I was building Empirical, I realized that embeddings could be the key to maintaining context in conversations and interactions. But the question was: where do I store them?

Exploring Existing Embedding Solutions: Do I Really Need One?

Once I wrapped my head around embeddings, I started looking into how existing products were implementing them.

Streamlit:

Hot-Swappable AI: Building Modular Memory for LLMs

I set out to see if a model could learn something new without retraining. The result is a working prototype that uses virtual tokens and LoRA adapters to hot-swap micro-models in real time through a Streamlit interface.

TypeScript:

Getting Started with Empirical: Setting the Foundation

I officially started working on Empirical, and things are shaping up! I'm building it with Ionic React and TypeScript

architecture:

Heartbeat AI — The Always-On Assistant

Most AI tools today wait for you to ask a question. Heartbeat AI doesn't.

Experiment: Expanding Context with Dynamic Virtual Tokens

Lately, I've been exploring an idea that sits somewhere between RAG (Retrieval-Augmented Generation) and fine-tuning, but with a twist: what if we could dynamically expand a model's “memory” by injecting data as virtual tokens instead of through traditional context windows or retraining?

Beyond Context Windows: Building an LLM with Injectable Layers

What if an AI didn’t need to be fed massive prompts every time it responded? What if context wasn’t passed in—but *part* of the model itself?

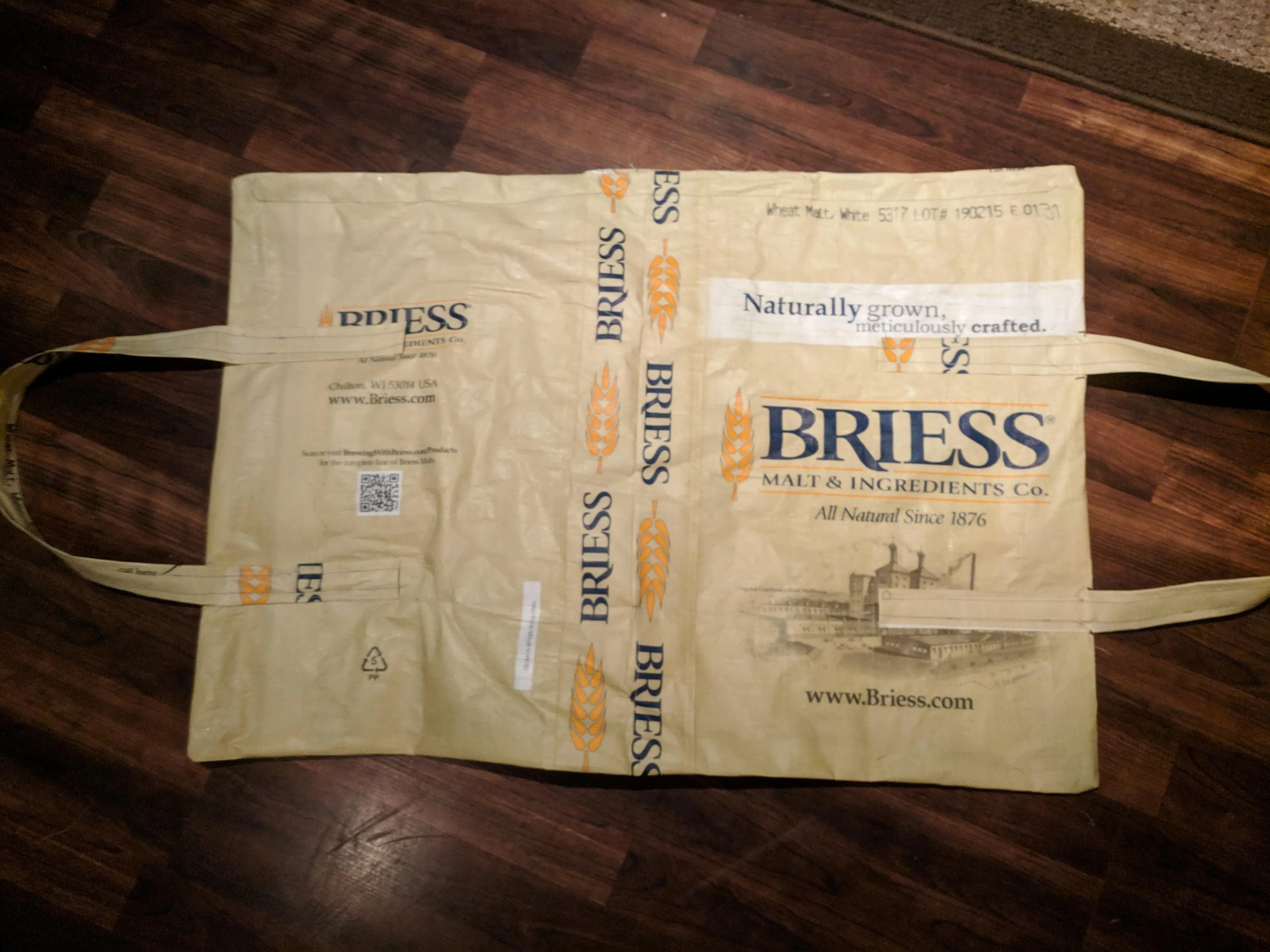

bags:

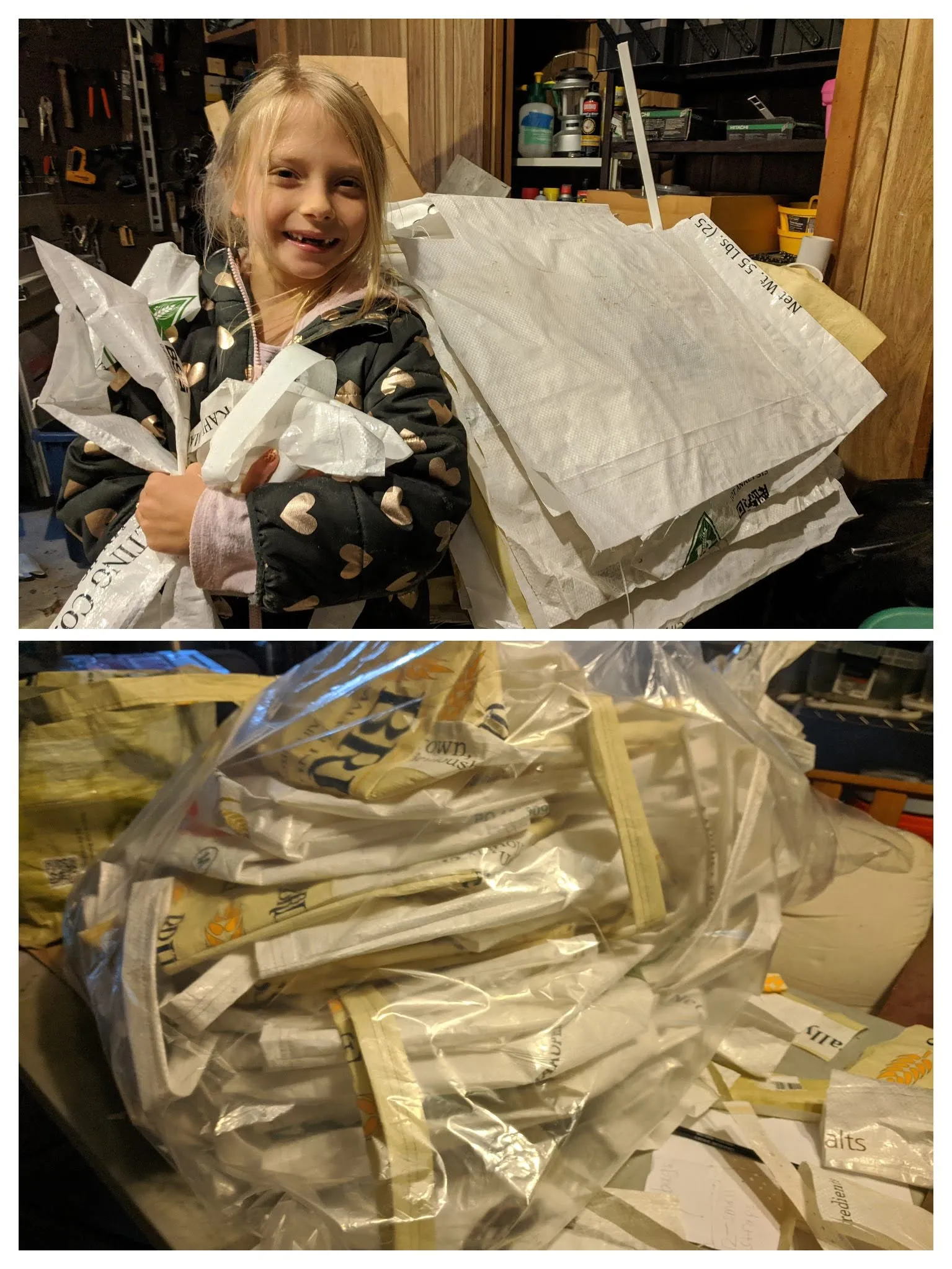

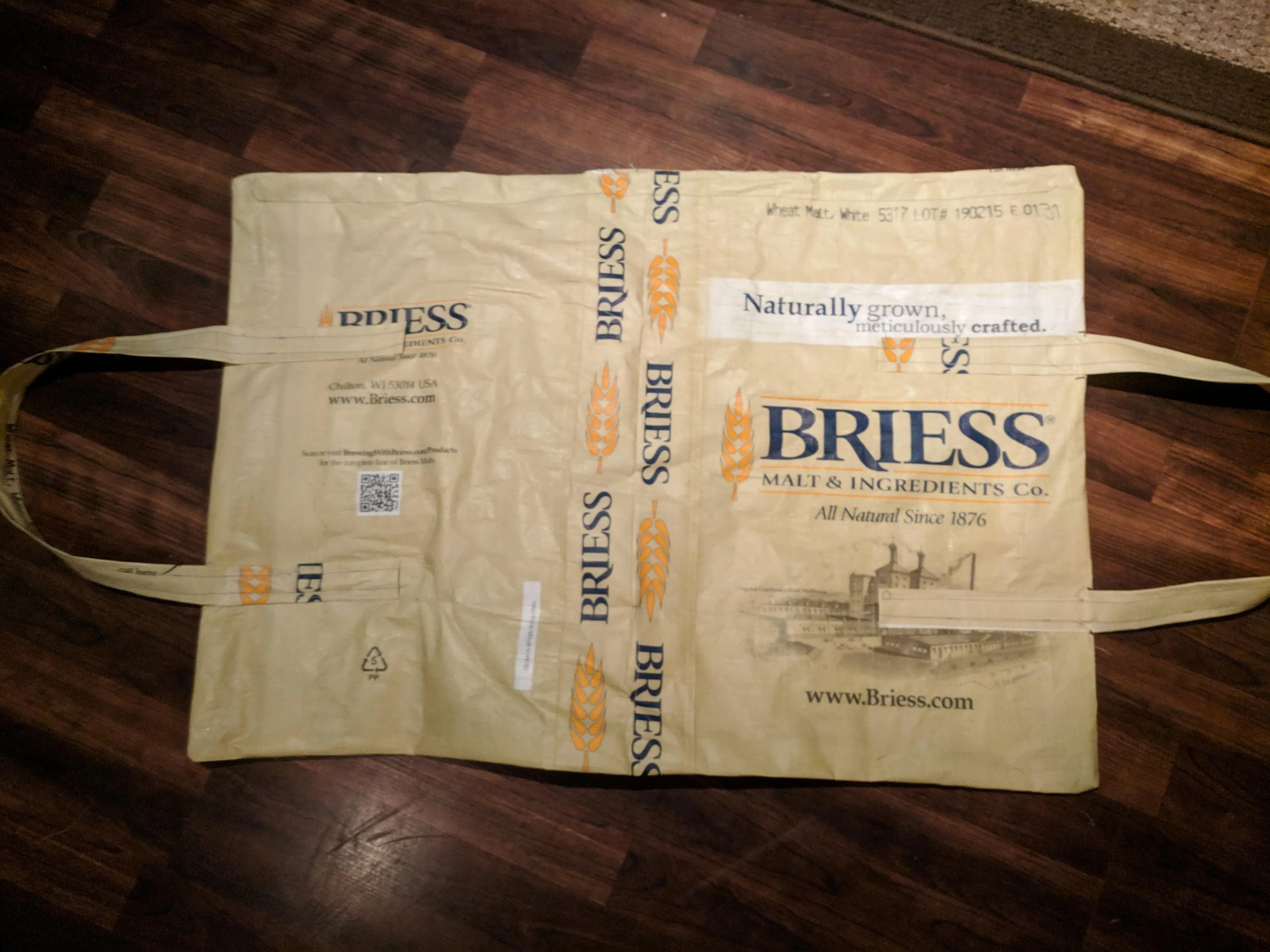

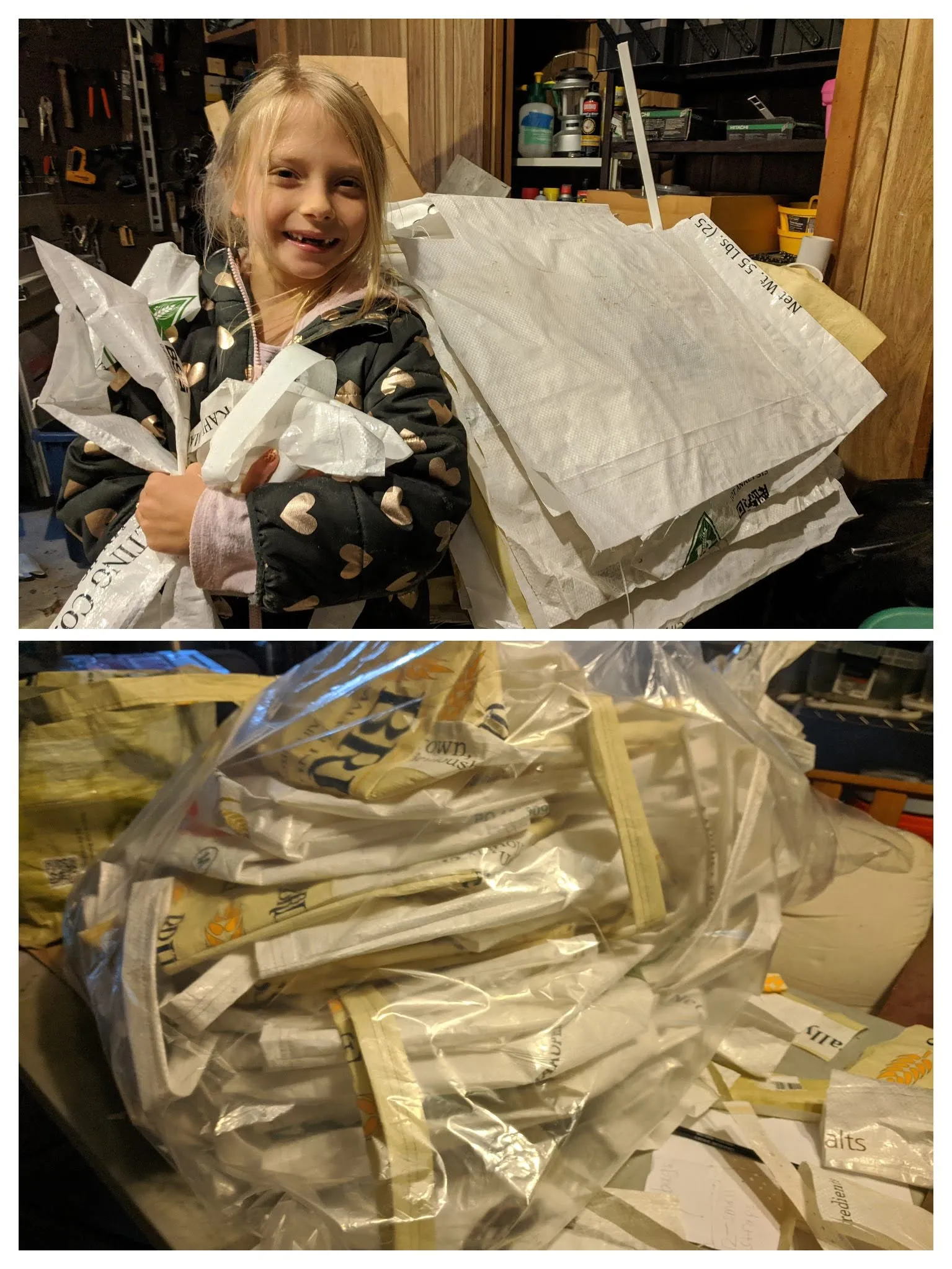

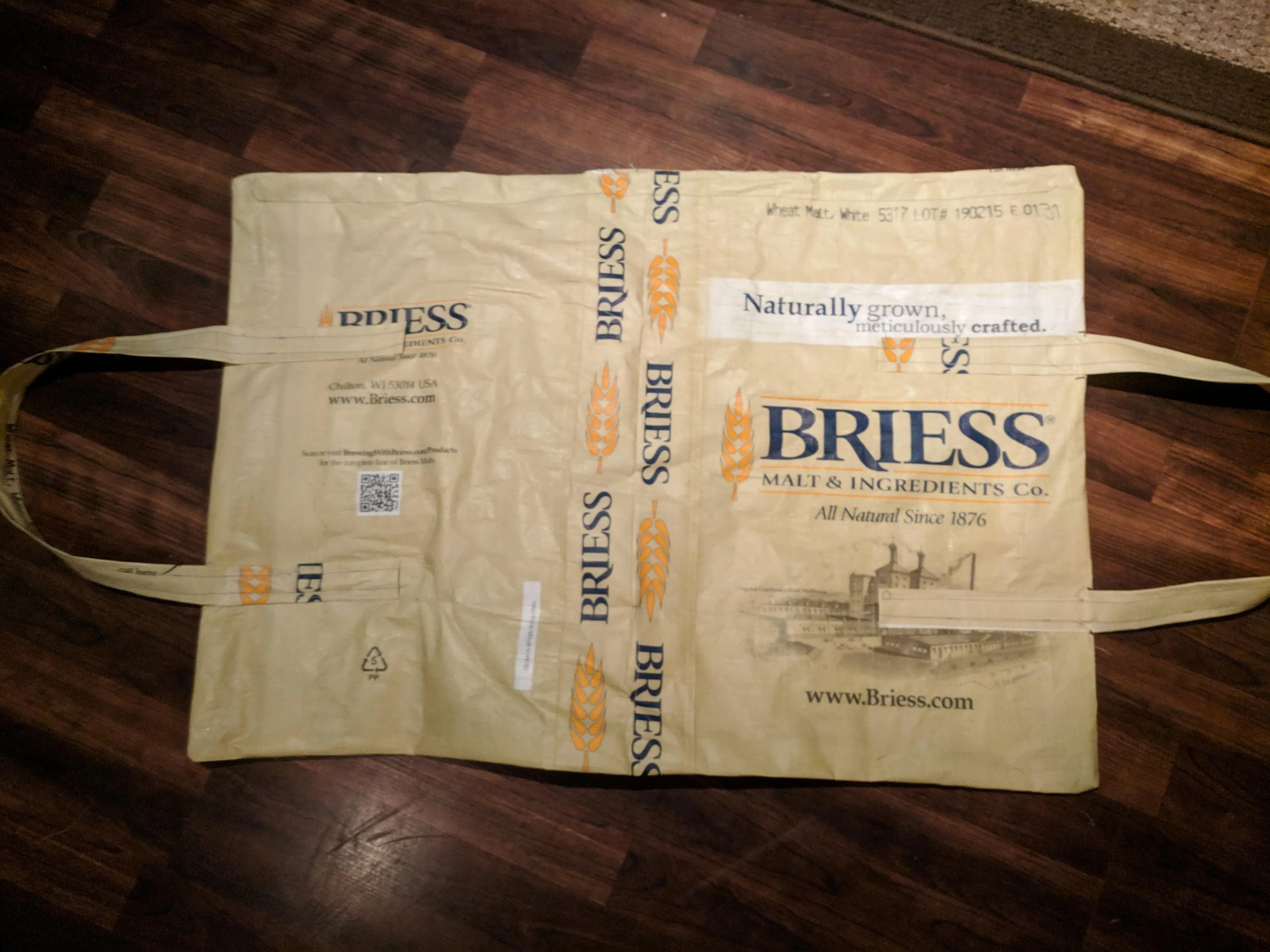

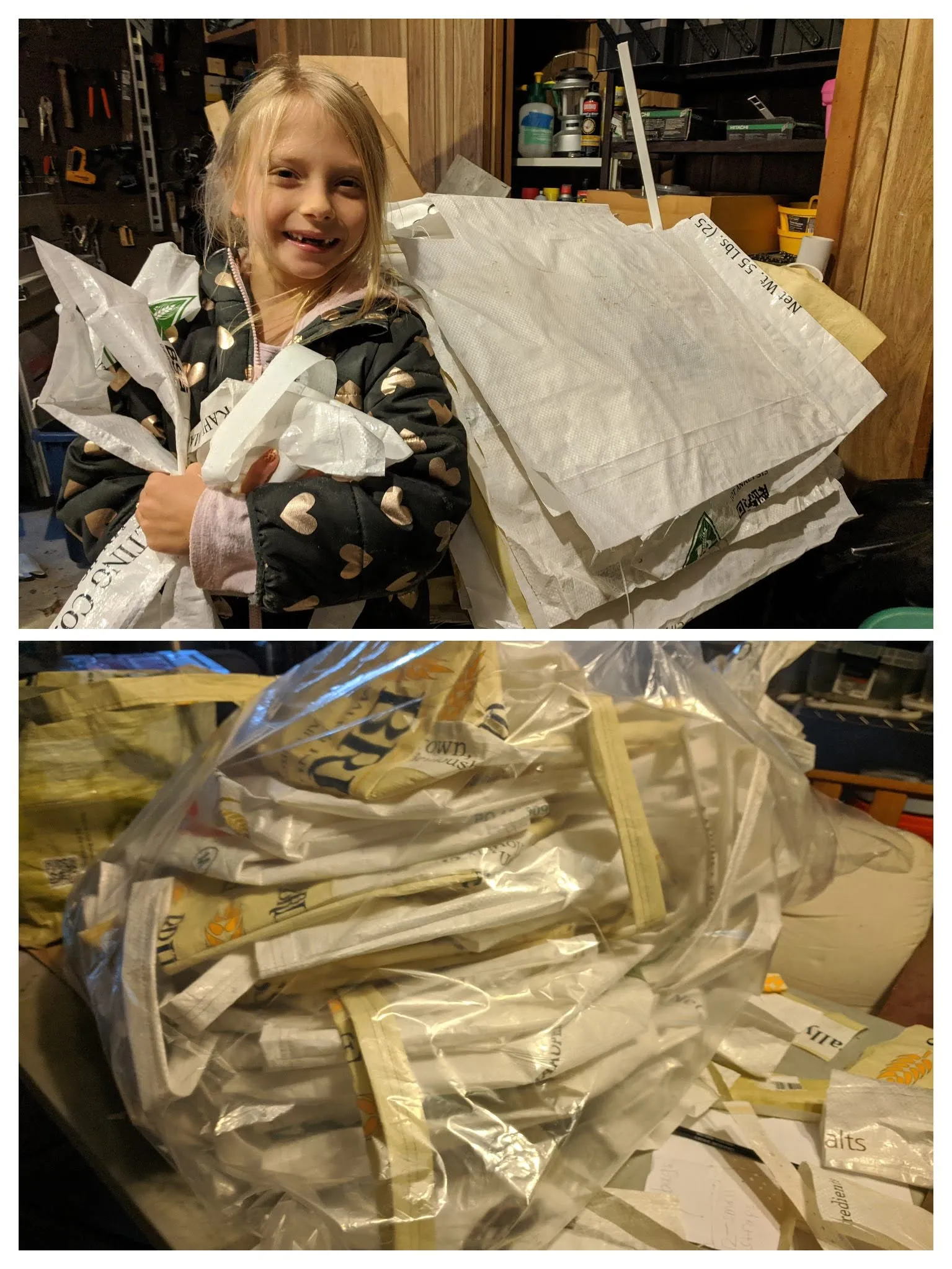

A Large Order for Upcycled Bags

Header Brewer Interested In My Bags

Mass Produced Bags

I was able to finish my "mass production" of upcycled reusable grocery bags. There are 20 bags in total. On average it took me about 1 1/2 hours per bag.

context:

Heartbeat AI — The Always-On Assistant

Most AI tools today wait for you to ask a question. Heartbeat AI doesn't.

Experiment: Expanding Context with Dynamic Virtual Tokens

Lately, I've been exploring an idea that sits somewhere between RAG (Retrieval-Augmented Generation) and fine-tuning, but with a twist: what if we could dynamically expand a model's “memory” by injecting data as virtual tokens instead of through traditional context windows or retraining?

Beyond Context Windows: Building an LLM with Injectable Layers

What if an AI didn’t need to be fed massive prompts every time it responded? What if context wasn’t passed in—but *part* of the model itself?

github pages:

Using tags to organize GitHub pages posts

I would like to figure out how to organize and group these posts better. The first thing that came to mind was tagging posts.

natural language:

Getting to the Heart of the Ask: Designing AI for Intent Clarity

When users talk to AI, they speak like humans. That’s the whole point. But the systems powering those conversations—whether it’s Azure DevOps, a database, or a custom app—speak a different language. This is where intent requests come in.

prompt engineering:

Heartbeat AI — The Always-On Assistant

Most AI tools today wait for you to ask a question. Heartbeat AI doesn't.

Experiment: Expanding Context with Dynamic Virtual Tokens

Lately, I've been exploring an idea that sits somewhere between RAG (Retrieval-Augmented Generation) and fine-tuning, but with a twist: what if we could dynamically expand a model's “memory” by injecting data as virtual tokens instead of through traditional context windows or retraining?

Beyond Context Windows: Building an LLM with Injectable Layers

What if an AI didn’t need to be fed massive prompts every time it responded? What if context wasn’t passed in—but *part* of the model itself?

Getting to the Heart of the Ask: Designing AI for Intent Clarity

When users talk to AI, they speak like humans. That’s the whole point. But the systems powering those conversations—whether it’s Azure DevOps, a database, or a custom app—speak a different language. This is where intent requests come in.

recycling:

A Large Order for Upcycled Bags

Header Brewer Interested In My Bags

Mass Produced Bags

I was able to finish my "mass production" of upcycled reusable grocery bags. There are 20 bags in total. On average it took me about 1 1/2 hours per bag.

sewing:

A Large Order for Upcycled Bags

Header Brewer Interested In My Bags

Mass Produced Bags

I was able to finish my "mass production" of upcycled reusable grocery bags. There are 20 bags in total. On average it took me about 1 1/2 hours per bag.

software design:

Heartbeat AI — The Always-On Assistant

Most AI tools today wait for you to ask a question. Heartbeat AI doesn't.

Experiment: Expanding Context with Dynamic Virtual Tokens

Lately, I've been exploring an idea that sits somewhere between RAG (Retrieval-Augmented Generation) and fine-tuning, but with a twist: what if we could dynamically expand a model's “memory” by injecting data as virtual tokens instead of through traditional context windows or retraining?

Beyond Context Windows: Building an LLM with Injectable Layers

What if an AI didn’t need to be fed massive prompts every time it responded? What if context wasn’t passed in—but *part* of the model itself?

Getting to the Heart of the Ask: Designing AI for Intent Clarity

When users talk to AI, they speak like humans. That’s the whole point. But the systems powering those conversations—whether it’s Azure DevOps, a database, or a custom app—speak a different language. This is where intent requests come in.

software engineering:

Using tags to organize GitHub pages posts

I would like to figure out how to organize and group these posts better. The first thing that came to mind was tagging posts.
